Multimodal Vision Research Laboratory

MVRL

multitemporal background models

Abstract

In surveillance applications there may be multiple timescales at which it is important to monitor a scene. This work develops online, real-time algorithms that maintain background models simultaneously at many timescales. This creates a novel temporal decomposition of a video sequence, which can be used as a visualization tool for a human operator or as an adaptive background model for classical anomaly detection and tracking algorithms. This paper solves the design problem of choosing appropriate timescales for the decomposition and derives the equations to approximately reconstruct the original video given only the temporal decomposition. We present two applications that highlight the potential of this approach: first, a visualization tool that summarizes recent video behavior for a human operator in a single image, and second, a pre-processing tool to detect "left bags" in the challenging PETS 2006 dataset, which includes many occlusions of the left bag by pedestrians.

Citation

Jacobs, N., Pless, R. (2006). Real-time Constant Memory Visual Summaries for Surveillance. In ACM International Workshop on Visual Surveillance and Sensor Networks (VSSN). See here for additional details.

Approach

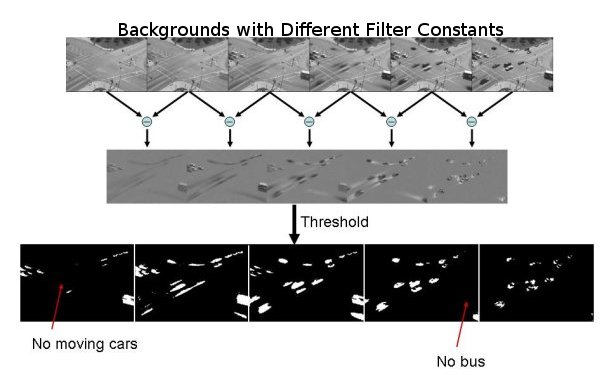

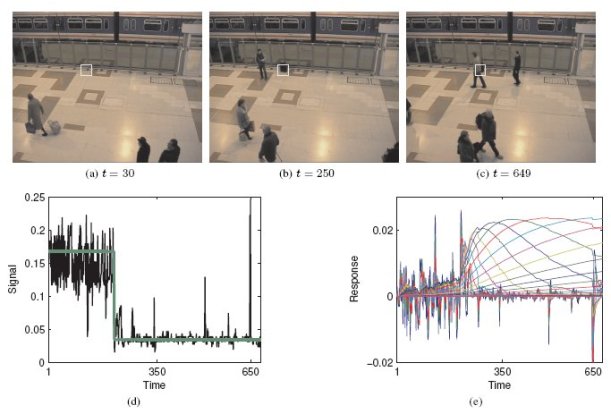

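We maintain background models at different timescales, i.e., with different filtering constants, and directly compare these background models. A fast model absorbs a newly arrived object within a few frames, while a slow model takes much longer, so the set of models that agree with each other indicates roughly how long an object has been at its current location.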
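The idea can be sketched for a single grayscale pixel as follows. This is a minimal illustration rather than the implementation from the paper: it assumes each background model is an exponential moving average, and the filtering constants in `ALPHAS`, the agreement `threshold`, and the function names are all hypothetical choices made for the example.

```python
from typing import List, Sequence

# Hypothetical filtering constants, ordered from fastest to slowest model.
ALPHAS = (0.5, 0.1, 0.02)


def update_backgrounds(backgrounds: Sequence[float], pixel: float,
                       alphas: Sequence[float] = ALPHAS) -> List[float]:
    """Apply one exponential-moving-average step to every background model."""
    return [(1.0 - a) * b + a * pixel for b, a in zip(backgrounds, alphas)]


def dwell_index(backgrounds: Sequence[float], threshold: float = 10.0) -> int:
    """Count how many slower models agree with the fastest model.

    The fastest model tracks the current scene most closely. Each slower
    model that already matches it implies the current content has been
    present for at least that model's timescale.
    """
    fastest = backgrounds[0]
    index = 0
    for slower in backgrounds[1:]:
        if abs(slower - fastest) >= threshold:
            break
        index += 1
    return index


def simulate(frames_present: int) -> int:
    """Dwell index after an object of intensity 100 has covered a pixel
    whose background intensity was 0 for `frames_present` frames."""
    backgrounds = [0.0] * len(ALPHAS)
    for _ in range(frames_present):
        backgrounds = update_backgrounds(backgrounds, 100.0)
    return dwell_index(backgrounds)


if __name__ == "__main__":
    for n in (0, 10, 50, 300):
        print(n, simulate(n))
```

Because each model stores only one value per pixel, memory use stays constant regardless of how much video has been processed. In a full system the same update would run over every pixel of every frame.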

Results

Using this method we can highlight objects that have been in the scene for different lengths of time. The videos below show two methods of visualizing this information: one is a false-color sequence and the other is a filtered version of the original video.

- Bus Video (wmv) - a video showing a traffic intersection, a false-color version of the scene (colored by when each pixel last changed), and a visualization that filters objects from the scene based on this false-color image.

- "Left Bag" Video (wmv) - a video from the PETS 2006 dataset highlighting a "left bag".
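The two visualizations can be sketched as follows, assuming that a per-pixel dwell index (0 for the most recently changed pixels, larger values for older content) has already been computed. The palette, the function names, and the flat-list image representation are hypothetical simplifications for illustration and are not taken from the paper.

```python
from typing import List, Sequence, Tuple

Color = Tuple[int, int, int]

# Hypothetical palette: red = just changed, yellow = older, blue = long-term.
PALETTE: Sequence[Color] = ((255, 0, 0), (255, 255, 0), (0, 0, 255))


def false_color(dwell_indices: Sequence[int]) -> List[Color]:
    """Color each pixel by how long ago it last changed."""
    return [PALETTE[d] for d in dwell_indices]


def filter_recent(frame: Sequence[float], slow_background: Sequence[float],
                  dwell_indices: Sequence[int], min_index: int) -> List[float]:
    """Keep pixels that have persisted for at least `min_index` timescales.

    Pixels that changed more recently are replaced by the slow background
    model, which removes short-lived objects from the displayed frame.
    """
    return [
        pixel if d >= min_index else background
        for pixel, background, d in zip(frame, slow_background, dwell_indices)
    ]


if __name__ == "__main__":
    indices = [0, 2, 1]
    print(false_color(indices))
    print(filter_recent([100.0, 20.0, 30.0], [10.0, 20.0, 35.0], indices, 1))
```

Raising `min_index` hides progressively older objects, so a passing pedestrian disappears at a low setting while a stationary object such as a left bag remains visible.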